In this post I will give a pattern for reading a csv file form SharePoint.

An example csv file

Table of Contents

For this post I created a csv file with 3 columns and two rows of data.

I uploaded this csv file to SharePoint, ready to be read by my Flow.

Newline

Later on we will use the output from a compose action with just a new line.

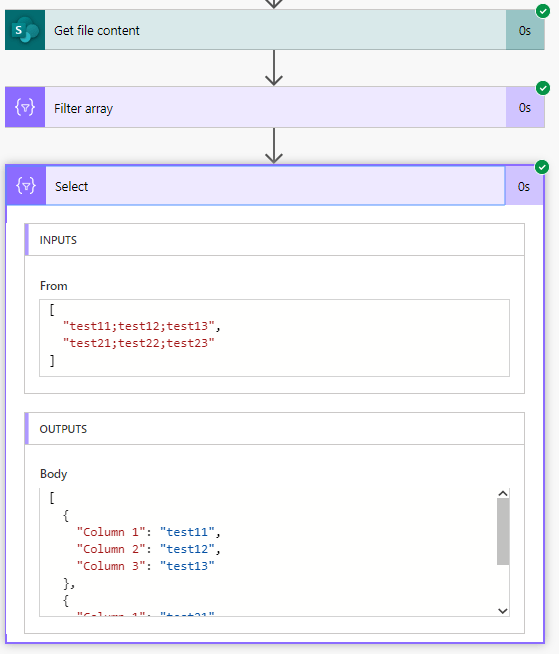

The flow to read csv files

After I built my flow I ended up with a json object in my flow that contained all the information from my csv file.

Only 3 steps needed to complete this task.

How to read a csv file from SharePoint.

This is the easy part. The get file content action will do this for you.

See there, we have $content! Ready to be converted to json.

Convert the CSV file to json

Ok, this is the tricky part.

The Filter Array action does two things. The obvious bit is that I want to filter out the empty lines as there is no need to process these.

The the From setting is where I’m turning the $content into an array of data that can be filtered.

json(

uriComponentToString(

replace(

replace(

uriComponent(

split(

replace(

base64ToString(

body('Get_file_content')?['$content']

),

',',';'),

outputs('Newline')

)

),

'%5Cr',

''),

'%EF%BB%BF',

'')

)

)The base64ToString function turns the weird looking characters in $content into something that already looks like the data in my csv.

base64ToString(

body('Get_file_content')?['$content']

)Then the replace around that replaces my commas with semi colons. The reason why I’m doing this is because the commas may cause some problems within my csv data.

replace(

base64ToString(

body('Get_file_content')?['$content']

),

',',';'),Then in the rest of the large expression I’m removing some of the junk that excel adds to csv files. (‘%EF%BB%BF’ and ‘%5Cr’)

You will also have noticed the outputs(‘Newline’). This is referring to a compose action in which I just pressed enter.

The final step

The final step of the flow has again 2 reasons.

- The first line of csv files contains headers rather than data and should be ignored.

- turn the column names into json labels.

In one of my favourite actions in Flow, the select action, I will use to skip the headers, the following expression:

skip(body('Filter_array'),1)Ok, that was easy!

split(item(),';')[0]

split(item(),';')[1]

split(item(),';')[2]If you are interested in a single step then you could also up vote the idea on the forum. Although when only 3 steps are required to read rows form a CSV file, there will hardly be a benefit 😉

Large CSV files

This all worked quite well until some people started complaining about larger CSV files. When you use larger csv files you may get the following error

InvalidTemplate. Unable to process template language expressions in action ‘Filter_array’ inputs at line ‘1’ and column ‘6808’: ‘The template language function ‘uriComponent’ was invoked with invalid parameters. The parameter at index ‘0’ cannot be converted to URI component.’.

So what does that mean?

the uriComponent function is complaining that it is getting invalid data. The error message is even giving us the clue where to look! The first parameter is wrong ( See the error message at index ‘0’)

uriComponent(

split(

replace(

base64ToString(

body('Get_file_content')?['$content']

), ',',';'

),

outputs('Newline')

)

)When I added the above expression to a compose action, I got the following output when my file exceeded 2622 lines. It all still looked the same as when I had less lines. The empty line is there because I edited the document in Excel and there is an additional line saved, but that is not a problem.

So what is the problem?

It looks like the uriComponent functions can only handle so much data. I reduced my file back to 2621 lines and my flow is successful. No I’m adding an extra column and the same error message appears.

This also explains why some of my followers mentioned a problem after a few thousand rows and some others mentioned the problem after 300 rows. It all depends on the data.

So how do we resolve this?

The easiest way is probably to split the csv file into smaller chunks. So that once we have read it and converted it from base64 to string and split it by the new lines then we could take a set number of lines from the csv file. But this number will depend on the width of the csv file.

To do this the following code could be added to a compose actions and then section could be added

split(

replace(

base64ToString(

body('Get_file_content')?['$content']

),

',',';'),

outputs('Newline')

)Then using the take function the first so many items could be collected and processed and the using the skip function the second part of the csv file could be handled afterwards.

Handling simple CSV data

This expressions that I just mentioned could also be used by itself.

split(replace(base64ToString(body('Get_file_content')?['$content']), ',', ';'),outputs('Newline'))resulting in the following flow:

Now the limit of the number of lines has gone and potentially we could now do the cleanups in each of the Columns in the Select action.

Thanks Pieter, this is awesome – just what I’ve been looking for! Thought I was going to need to use a third-party service for this.

One thing – it looks like there’s a reference to an action called “Newline” in the main expression, which currently doesn’t appear in your article above. I found that I if I added a Compose action renamed to “Newline” just above the “Filter array” action, and typed a single carriage return into the action’s “Inputs” field, it made everything work a treat.

Hi Dave,

That is exactly the way to do it and the way I did it. For some reason replacing \n or \\n doesn’t work.

Hello, very helpful. Can you share the full flow with us because I couldn’t reapply it. Thanks !

How far did you get? Where did you get any errors? Please feel free to share you experiences.

I am getting such error on filter array using your code: Unable to process template language expressions in action ‘Filter_array’ inputs at line ‘1’ and column ‘3308’: ‘The template language function ‘uriComponent’ was invoked with invalid parameters. The parameter at index ‘0’ cannot be converted to URI component.’.

That sounds like you are getting the first element of an empty array. You might want to check the data that you are pushing into the Array Filter.

Hello,

Data is there, I checked. Issue lies in split, it just doesn’t catch it. I have even converted data so \r\n is replaced by |. So i do

json(

uriComponentToString(

replace(

replace(

uriComponent(

split(

outputs(‘ConvertEOL’),

‘|’)

),

‘%5Cr’,

”),

‘%EF%BB%BF’,

”)

)

)

Where outputs looks like: “column1;column2|data1;data2|data3;data4|..etc”

I need help after the get file content. I add the filter array but when I execute the flow it gives error “The value must be of type array.”?

Hi Sherwin,

I would take the input that you are using for the Filter Array action and put it into a compose action. Then run the flow and see what the data is that you get? If this isn’t an array or if there isn’t any data then slowly take away bits of the expression and check where the unexpected data processing is happening.

Hi, great method, and thanks for sharing, I have a couple of questions though. in the section Convert the CSV file to json, it has an item expression in the value field (where the value is not equal to null), can you confirm what that need to be, I have tried to enter item() but my flow keeps turning it into triggerbody(item) instead of this item? Also I am getting the error “Unable to process template language expressions in action ‘Filter_array’ inputs” and have tried to implement the fix mentioned in the comments but getting nowhere, my data is fine, I dont have any blanks or blank rows or anything the I can confirm that the outputs show exactly what I was expecting. Help?

Hi Rob,

The advanced expression should be

@not(equals(item(), ''))I think that when you get the template language expression error that there is something wrong with the input of the filter action. this could for example happen when the input contains characters important to json itself. e.g. ” or @ or anything else that json might see as a special character. I would need to look at the actual data to get a better feel for that problem.

Hi Pieter, thanks for that, I have updated the item expression and I think that is OK, although it has the wrong icon on it!

This is from the exchange report export and after I have edited to ; delimited and | instead of returns Im left with something that looks like this:

“Report Refresh Date;User Principal Name;Display Name;Is Deleted;Deleted Date;Created Date;Last Activity Date;Item Count;Storage Used (Byte);Issue Warning Quota (Byte);Prohibit Send Quota (Byte);Prohibit Send/Receive Quota (Byte);Deleted Item Count;Deleted Item Size (Byte);Report Period\r|2020-06-06;arcnsm@hants.gov.uk;Martin, Shaun;False;;2018-04-27;2020-06-06;170885;101752206840;105226698752;106300440576;107374182400;52846;12081470886;30\r

from what you said, I’m wondering if either the \r or @ symbols in the email are causing issues, I dont really know how to remove the \r, I have to use

replace(replace(body(‘Get_file_content’),decodeUriComponent(‘%0A’),’|’), ‘,’, ‘;’)

to get rid of the \n in the first instance. Then the next question would be how to translate the @ symbol so it can be used? Is there something in the header row thats causing a problem? Maybe I need to delete the headers?

You could replace the @ with an @@ so that it is escaped. These problems can be a real pain.

If you need any detailed help we can have a chat about making this work. please feel free to hit the chat button on this site.

Hi Pieter, I got it to work in the end, as my csv was not being generated by Excel I removed the uricomponent / uricomponenttostring sections. In order to diagnose the issue more effectively I also broke each step down into separate steps

Just to let you know as well you can remove those special characters with encoding the whole string to URIsafe, you can do another

replace(body(‘Get_file_content’)?[‘$content’], decodeuricomponent(%EF%BB%BF), ”)

There are a couple of things you could add here that might be helpful, how to convert that array back into an object so we can use parseJSON, also I find with these that once done, in order to do more with it, you need to push it through an apply to each command, which in most cases will be locked down to a maximum of 5000 rows.

Just to finish, this post has been exceptionally useful and your help on chat was very valuable, thank you Pieter for your help with this and if you need anything, let me know!

Hi Rob,

Thank you for your comments and feedback.

It is great to hear that you got it to work at the end.

In general I skip the parse json other than when I use parse json to validate data. But I agree that it can help make flow development just that little bit easier.

Hi Rob,

I finally got to review this post. That would also avoid the issue of large csv files that I just updated the post with.

Correct to include a valid reference to ‘Newline’ for the input parameter(s) of action ‘Filter_array’. I’m getting this error with filter array step. can you advise ? I have only 2 columns with my excel name and group.

I’ve added the new line explanation in the post now.

I’m also getting same error “Correct to include a valid reference to ‘Newline’ for the input parameter(s) of action ‘Filter_array’.” could you help me

Hi, I added that earlier today. Just add a compose anywhere in your flow and press enter in it.

While Reading CSV using flow what if my column has content with comma how to handle that

There isn’t really a great way around that, but what you could do it replace ‘”,”‘ with ‘”###”‘ before processing your csv. Now your csv separator is ### and the commas in the csv data will be processes as you want.

Hi Pieter,

Your solution looks fantastic! Life Saver indeed.

I followed into my solution, but I am getting error on Select action as-

failed: ‘The template language expression ‘split(item(),’;’)[1]’ cannot be evaluated because array index ‘1’ is outside bounds (0, 0) of array

Hi Shri, any empty lines in your csv?

Yes that was the catch, thanks very much 🙂

I am having issues w/ commas as well and saw your resolution. The csv file I’m using isn’t clean data and there are commas and line breaks within the data. Is your resolution for the comma issue with replacing ‘,’ commas w/ something else ‘###’, being done outside of power automate? I would like to automate everything but it looks like w/o regular expressions, getting the commas out of the csv data isn’t possible w/ flow.

How about replacing “,” with “###” so including the double quotes that you find in the csv? Or does your csv not have double quotes around the data?

I’m SO glad you posted this question about the empty lines. This has been driving me nuts!

Pieter, thanks for this tutorial, it really solves the issue in at least 5 less steps then others 🙂

However, I am now facing a problem that resulting data from parsing has replaced all the Unicode characters (accented letters) from CSV with “�”. Any suggestions?

You should be able to replace those characters with nothing. They are basically non visible characters.

I know I am able to do it, but I need them to stay in original. Those characters are necessary in my language, so if five different of them are always replaced with that single symbol, the output data is of no use for me 🙁

My bad, Pieter… CSV is generated from some DOS application that doesn’t save the encoding info with the file. Sorry for the confusion and keep up the good work of helping the Power Automate community.

Hello,

I seem to be struggling with this. I get this error: Unable to process template language expressions in action ‘Filter_array’ inputs at line ‘1’ and column ‘14697’: ‘The template language function ‘split’ expects its second parameter to be of type string. The provided value is of type ‘Object’. Please see https://aka.ms/logicexpressions#split for usage details.’.

also where do you get the item{/} to add in the Filter array? I only have Outputs as an option.

I was getting the Newline error but I added a Compose above the filter array. Was that correct.

Thanks,

Craig

Hi Craig,

That error means that there is something wrong within the Filter Array action. Where you are using the split function the split isn’t generating an array as the split function isn’t given a second parameter that looks like this: ‘,’

What is the second parameter in your split function set to?

Potentially you could add a compose action before the fitler array action that just contains that split function. It will make the error messages less cluttered.

Thank you Pieter. I continue to be awed by your clever workarounds and this general approach worked great for me, once I understood your logic. Please keep on posting.

Thanks Martin!

Hi Pieter,

Great guide, thanks for this. But I am having some problems. My CSV entries contain special characters:

– I have URLs, for example using ‘:’ ‘/’ ‘.’ ‘_’

– Timestamps using ‘-‘ ‘:’

– And money values values using ‘,’ so how would I handle these when parsed into JSON so I can keep them on the otherside?

What are the ways around this?

Also, separately, my Filter Array fails with the following error:

“Unable to process template language expressions in action ‘Filter_array’ inputs at line ‘1’ and column ‘27756’: ‘The template language function ‘json’ expects its parameter to be a string or an XML. The provided value is of type ‘Array’.”

Your JSON references output(‘Newline’), is there a predefined variable I am missing?

When you use characters that can cause problem, you will need to look at escaping these. See also my post about apostrophes.

https://sharepains.com/2019/10/07/handling-apostrophes-in-microsoft-flow/

Can you post the json expression that you are using? Something must be different from my example.

The Output(‘Newline’) requires a compose action that has a new line in it. This compose action will need to be renamed to Newline

I think I am having problems with my base64ToString formula: it doesn’t seem to actually convert properly which is causing the issue:

https://imgur.com/a/bvGysFz

https://imgur.com/a/xtz5T49

What in there have I got wrong?

Hi Maxim,

That all looks ok, but I can see that the conversion isn’t happening. Feel fre to hit the chat and we can have a more detailed look

Hi Pieter,

I have been trying with the CSV read. When I read a CSV of 500 lines the code works smoothly. However when I increase number of lines to 1,000, I get the error message: “InvalidTemplate. Unable to process template language expressions in action ‘Filter_array’ inputs at line ‘1’ and column ‘6302’: ‘The template language function ‘uriComponent’ was invoked with invalid parameters. The parameter at index ‘0’ cannot be converted to URI component.’.” Not sure what setting I need to change to increase the number of lines that the flow can read?

Hi Pieter,

I tried the flow above and it worked nice for files of 500 lines or less. WHen I tried it with 1000 lines, I get the error:

InvalidTemplate. Unable to process template language expressions in action ‘Filter_array’ inputs at line ‘1’ and column ‘6302’: ‘The template language function ‘uriComponent’ was invoked with invalid parameters. The parameter at index ‘0’ cannot be converted to URI component.’.”

Any ideas how I can use the logic for CSV files of large size?

I’m quite sure that this has worked for me in the past beyond 500 rows. How many columns are there in your CSV? Also how large is the file when you do have 500 lines?

There are 10 columns 389K rows and growing

One of the CSVs is 389K lines and 10 columns. However I haven’t even gotten to use that one. Only thing I did was duplicating the lines for the 500 rows to 1000 and all of a sudden the same file didn’t work anymore. When I would remove all the extra lines, it worked again

Hi Robert, if you open a chat on the site I can see if I can help you further

Hi Robert,

I have updated the post. I found a limitation of one of the functions used.

Hello,

I’m not at an advanced level for this. So please reply with simple detail.

We download the meeting attendance lists from Teams during the meeting. It produces a file called “meetingAttendanceList.csv”

However, this is the actual format of my excel csv file.

Full Name User Action Timestamp

“Binkley, Daniel” Joined “1/27/2021, 9:58:18 AM”

“Ostergaard, Kristina” Joined before “1/27/2021, 9:58:18 AM”

I have the following setup:

1. Get file content

2. Initialize Variable “Newline”

3. Filter array

question 1:

anytime I use the @ in the expression, I always get this error: “The expression is invalid.”

so I have to input not(equals(item(), ”)) for the filter array. Is this still going to work properly?

question 2:

here is my output from file content:

{

“$content-type”: “application/octet-stream”,

“$content”: “//5GAHUAbABsACAATgBhAG0AZQAJAFUAcwBlAHIAIABBAGMAdABpAG8AbgAJAFQAaQBtAGUAcwB0AGEAbQBwAA0ACgAiAEIAaQBuAGsAbABlAHkALAAgAEQAYQBuAGkAZQBsACIACQBKAG8AaQBuAGUAZAAJACIAMQAvADIANwAvADIAMAAyADEALAAgADkAOgA1ADgAOgAxADgAIABBAE0AIgANAAoAIgBPAHMAdABlAHIAZwBhAGEAcgBkACwAIABLAHIAaQBzAHQAaQBuAGEAIgAJAEoAbwBpAG4AZQBkACAAYgBlAGYAbwByAGUACQAiADEALwAyADcALwAyADAAMgAxACwAIAA5ADoANQA4ADoAMQA4ACAAQQBNACIADQAKAA==”

}

I copied your code exactly for the Filter array from.

I get this error:

InvalidTemplate. Unable to process template language expressions in action ‘Filter_array’ inputs at line ‘1’ and column ‘11374’: ‘The template language function ‘split’ expects its second parameter to be of type string. The provided value is of type ‘Null’. Please see https://aka.ms/logicexpressions#split for usage details.’.

If I remove the “replace comma with semicolon”, I get this error:

InvalidTemplate. Unable to process template language expressions in action ‘Filter_array’ inputs at line ‘1’ and column ‘11373’: ‘The template language function ‘base64ToString’ expects its parameter to be a string. The provided value is of type ‘Object’. Please see https://aka.ms/logicexpressions#base64ToString for usage details.’.

I’m completely frustrated. Please help.

Thanks,

Kristina

Hi Kristina,

The problem with the content is that it is in base64 format. You can convert this to text using the base64tostring function available in the expression editor.

If you need any help feel free to hit the chat on this page.

I thought that is what this code was doing?

json(

uriComponentToString(

replace(

replace(

uriComponent(

split(

replace(

base64ToString(

body(‘Get_file_content’)?[‘$content’]

),

‘,’,’;’),

outputs(‘Newline’)

)

),

‘%5Cr’,

”),

‘%EF%BB%BF’,

”)

)

)

Yes it is. However it looks like the base64 conversion might be failing. Can you create a compose action and just add the base64tostring( … ) part in there as an expression?

When I use this I get the below error:

base64ToString(

body(‘Get_file_content’)?[‘$content’]

)

The ‘from’ property value in the ‘query’ action inputs is of type ‘String’. The value must be an array.

Hey Pieter, thanks for this. I’ve been using this flow for a few months and just received an error. It was the …”cannot be evalutated because array index 4 is outside of bounds (0,3) of array.”

I looked through the filter array output (by adding a compose operation) and I see that one row is missing the second column, so I’m guessing that is causing the error. Any ideas on how to prevent this from failing?

Hi Tim,

You could use conditions to handle these kind of problems. In apply to each action you could use a condition step. In Select actions you could use an if function. in both cases check if the value is empty and then return and empty string.

Hi,

This is great and exactly what i’m looking for. But do you know a way to remove the empty line from the bottom of the CSV file in the same process?

In general I just make sure that the flows test for empty lines and then ignore those as that will also handle empty lines or malformed lines in the middle of my data

Hi am facing this error, can you plz tell what’s wrong

Unable to process template language expressions in action ‘Filter_array’ inputs at line ‘1’ and column ‘6186’: ‘The template language expression ‘json( uriComponentToString( replace( replace( uriComponent( split( replace( base64ToString( body(‘Get_file_content’)?[‘$content’] ), ‘,’,’;’), outputs(‘Newline’) ) ), ‘%5Cr’, ”), ‘%EF%BB%BF’, ”) ) )’ cannot be evaluated because property ‘$content’ cannot be selected. Property selection is not supported on values of type ‘String’. Please see https://aka.ms/logicexpressions for usage details.’.

The problem will be the content of the csv file. First find out which line the flow is struggling with and the identify the problem

Hi,

For the Split formula, “?” is missing.

Power Automate did not allow me to access the array element without “?”, I spent like 2-3 hours on this, without realizing the missing “?”.

split(item(),’;’)?[0]

split(item(),’;’)?[1]

split(item(),’;’)?[2]

I hope this will help someone else.

Best Regards,

Karthik

Hi Karthik,

I just create a flow with two compose actions.

The first one contained a sentence and the second one used the following expression:

split(outputs(‘Compose’),’ ‘)[0]

The flow ran successfully.

Then I used a select action which was taking an array of sentences and used the following expression successfully as well:

split(item(),’ ‘)[0]

I don’t know if CSVs taken from Excel are sometimes different, but that delimiter change to ; doesn’t look like it will work. That should just replace all commas, delimiter or natural in-data commas, to semicolons, so the parsing won’t work any better.

If anyone wants a template that doesn’t require chunking the data and that actually deals with in-data commas, check this page: https://powerusers.microsoft.com/t5/Power-Automate-Cookbook/CSV-to-Dataset/td-p/1508191

awesome article!

For the people with the “\r” issue

try this: split(replace(base64ToString(body(‘Get_file_content’)?[‘$content’]), ‘,’, ‘;’),decodeUriComponent(‘%0D%0A’))

ref: https://www.tachytelic.net/2021/02/power-automate-parse-csv/